Many content producers might share a similar nightmare: Unorganized piles of images and videos strewn across company drives, or…dare we say…zip files in an email chain?!

We certainly hope your content management situation isn’t as scary as sorting through zip files for the image you need. Nevertheless, any file management system that doesn’t make it easy for you to find what you’re looking for can be endlessly frustrating, and a huge waste of time.

As your digital library grows, easy file retrieval and consistent content across your site(s) depend more and more on how thoroughly you organize it all. But organizing all of your videos, images, and other files in a way that makes everyone’s life easier…presents a new challenge: Who has time for that?

That’s where a Digital Asset Management platform with smart tagging capabilities comes into play!

What is a Digital Asset Management platform?

Put simply, a Digital Asset Management platform, or Digital Asset Manager (DAM), is a solution designed to keep your company’s files organized and easily accessible to your teams.

These files might be anything you use to provide a unified user experience across your site, including images, videos, animations, and documents.

Why implement DAM?

DAMs are used far and wide by brands with large digital portfolios, and they’re essential for ensuring consistency across content.

Brands with particularly demanding file libraries need a single source of truth that all their teams can refer back to when creating new content, pages, or sites. And a properly organized DAM integrated into a brand’s CMS fulfills this need!

Tagging assets within DAM

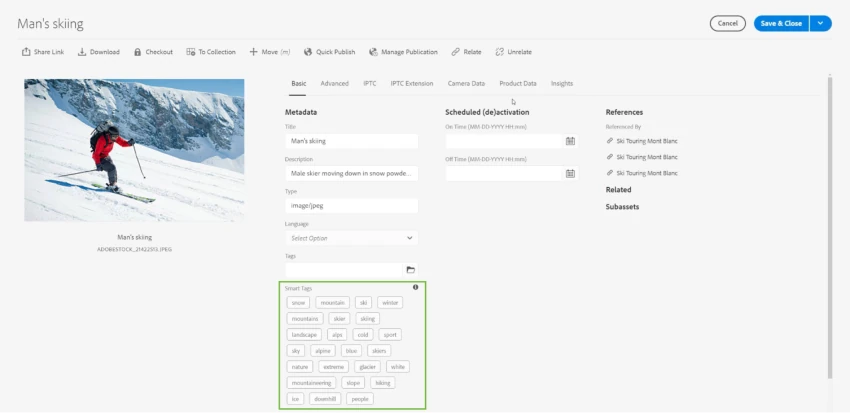

For your team to find images, videos, and more in your DAM platform, you’ll need to include tags in the metadata for each asset. These tags are keywords that help describe the file, and they enable it to appear when teams search for those keywords.

For example, let’s say your company owns an image of a woman eating spaghetti. Relevant tags for an image like this might be along the lines of: woman, eating, spaghetti, food. It might show up when your team searches “woman eating,” or simply “spaghetti.”
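To make the idea concrete, here is a minimal Python sketch of keyword search over tag metadata. The `Asset` record and `search` function are hypothetical and only illustrate the principle. They are not AEM’s API. The rule that an asset must carry every keyword in the query is a simplifying assumption, and AEM’s real search is considerably more sophisticated.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """Hypothetical DAM asset record: a file name plus its tag metadata."""
    name: str
    tags: set[str] = field(default_factory=set)


def search(assets: list[Asset], query: str) -> list[str]:
    """Return names of assets whose tags contain every keyword in the query.

    Matching is case-insensitive; requiring all keywords is an assumption.
    """
    keywords = {word.lower() for word in query.split()}
    return [a.name for a in assets if keywords <= {t.lower() for t in a.tags}]


library = [
    Asset("spaghetti-dinner.jpg", {"woman", "eating", "spaghetti", "food"}),
    Asset("salad-bowl.jpg", {"salad", "food"}),
]

print(search(library, "woman eating"))  # ['spaghetti-dinner.jpg']
print(search(library, "spaghetti"))     # ['spaghetti-dinner.jpg']
print(search(library, "food"))          # ['spaghetti-dinner.jpg', 'salad-bowl.jpg']
```

An untagged photo of the same meal would never appear in any of these results. That gap is exactly what tagging closes.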

You can’t manage content well without these tags. But what if you have millions of files that need to be tagged?

Thankfully, that’s where a DAM with smart tagging capabilities comes in handy.

AEM Assets: An Advanced DAM Solution

There are many DAM solutions out there that achieve similar ends—that is, they create a centralized hub to store all of your digital property and organize it as you see fit.

Adobe Experience Manager (AEM) Assets, however, is an advanced DAM that goes a step beyond the status quo for businesses with more complex file management needs.

AEM Assets opens doors to even more tools and capabilities. Here are just a few of its many perks:

- Easy creation of new content versions using Photoshop, Lightroom, or even AI.

- In-depth analytics on each asset’s performance with your customers.

- The ability to define which teams can use which files and to what extent.

One of the most useful tools (by far) that AEM Assets brings to the table is its use of Adobe Sensei AI to automatically tag new and existing files in your DAM.

This enables you to make millions of images, videos, and text-based assets searchable for all your teams with relevant tags in no time. And that’s certainly much better than spending countless hours assessing these digital properties and applying tags one at a time.

Now, while this is an incredibly powerful feature, it’s not ready to go out of the box. Let’s do a deep dive into what you can expect from the setup process!

How AEM Smart Content Service Works

AEM Smart Content Service is a feature within AEM Assets that uses Adobe Sensei AI to learn and reproduce how you want to tag your digital properties. It relies on image recognition to evaluate your assets and decide how best to tag them.

Here’s an overview of the process, to give you a better idea of what you’ll be working with:

- You’ll upload your images, videos, or text-based files into AEM Assets, where Smart Content Service’s AI will automatically tag them.

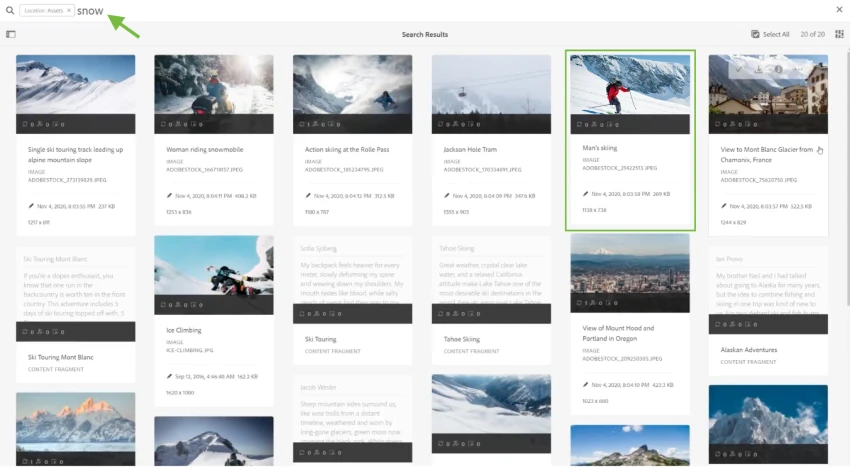

- Now, when you open the asset console of the DAM platform, you’ll be able to type a keyword that describes the type of file you need. The console will then show you any images, videos, or other assets that have that keyword in their smart tags.

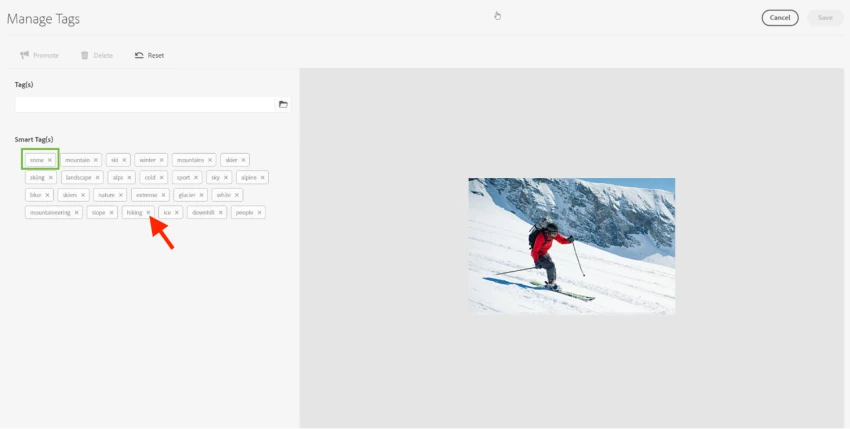

- From there, if you notice that some searches turn up files that don’t match the query, you can edit those assets’ smart tags and remove the ones that aren’t accurate.
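The review step in the last bullet can be sketched in Python as follows. This is a hypothetical illustration of the workflow, not AEM code. `TaggedAsset`, `matches`, and `remove_tags` are invented names, and the sample photo and tags are made up.

```python
from dataclasses import dataclass, field


@dataclass
class TaggedAsset:
    """Hypothetical asset record holding the smart tags the AI applied."""
    name: str
    smart_tags: set[str] = field(default_factory=set)

    def matches(self, keyword: str) -> bool:
        """True if the keyword is one of the asset's smart tags (case-insensitive)."""
        return keyword.lower() in {tag.lower() for tag in self.smart_tags}

    def remove_tags(self, rejected: list[str]) -> None:
        """Reviewer step: drop smart tags judged inaccurate (case-insensitive)."""
        drop = {tag.lower() for tag in rejected}
        self.smart_tags = {tag for tag in self.smart_tags if tag.lower() not in drop}


photo = TaggedAsset("beach-run.jpg", {"beach", "running", "Dog"})
print(photo.matches("dog"))  # True: a "dog" search returns this photo, but it shows no dog
photo.remove_tags(["dog"])
print(photo.matches("dog"))  # False: the photo no longer appears for "dog"
```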

Training AEM Smart Content Service

Truth is, even features considered to be “smart” still need some education. Although Smart Content Service has access to a powerful image recognition AI, it won’t know how you need your digital properties to be tagged if you don’t teach it.

Your business taxonomy

Every business has a fairly unique taxonomy—meaning that every business uses specific language, descriptors, and classifications to describe the content that appears in their files.

For example, an untrained Smart Content Service may only be able to recognize a BMW motorcycle as a motorcycle. Needless to say, when all you sell are motorcycles…that’s not a helpful tag for your team.

From a business standpoint, BMW would know that when their teams are accessing files to design content, they would likely be searching for images of specific models.

In this case, your business taxonomy would be the model names you use to describe different motorcycles. And Smart Content Service wouldn’t know this on its own without learning your specific business taxonomy first.

AI generating AEM smart tags around your business taxonomy

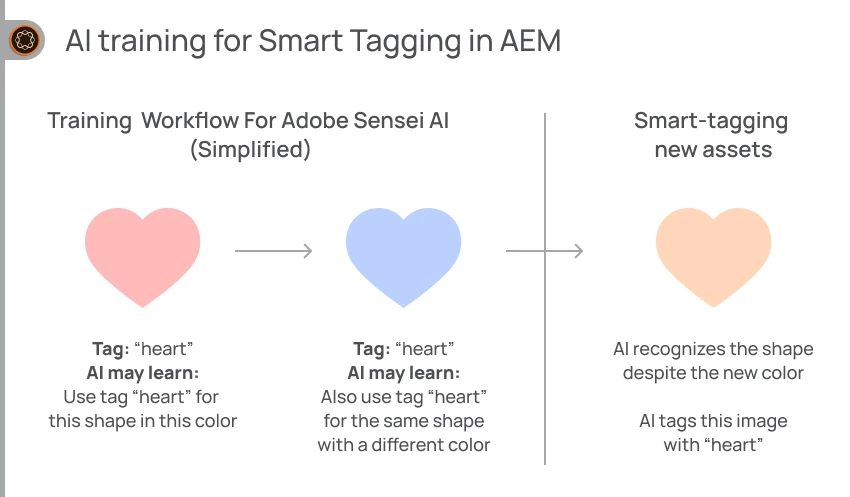

Training the AI to recognize your business taxonomy is fairly straightforward in theory: you present it with a folder of images that share the same tags, with some variety among visually similar images.

This is done so that the AI can learn to identify why the same tag applies to varying images. When Smart Content Service evaluates other assets after training, this knowledge will help it note those same identifiers, and apply those same AEM tags.

To effectively run a training process with your images, you’ll need a few things:

- At least 30 images for each tag.

- Visual similarity among images that share the same tag. (For instance, the same model wearing the same kind of shirt in the same pose.)

- Variety among the images that share the same tag. (For instance, the same model in the same pose wearing the same kind of shirt, but in many different colors.)

- Images with little to no distracting elements, like complex backgrounds.

- Images tagged with every tag in your taxonomy that applies to them.
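Before starting a training run, you could check the first requirement with a short script like this Python sketch. It assumes your training folder is described as a mapping from file name to tags. The helper name and the `model-a`/`model-b` tags are hypothetical. The 30-image threshold comes from this article, so verify it against Adobe’s current guidance.

```python
from collections import Counter

# Minimum images per tag, as stated in this article; check Adobe's current guidance.
MIN_IMAGES_PER_TAG = 30


def tags_needing_more_images(
    training_set: dict[str, list[str]], minimum: int = MIN_IMAGES_PER_TAG
) -> dict[str, int]:
    """Map each under-represented tag to how many more images it needs.

    training_set maps an image file name to the tags applied to that image.
    Tags that appear on no image at all cannot be detected here.
    """
    # Count each tag once per image, even if it was listed twice by mistake.
    counts = Counter(tag for tags in training_set.values() for tag in set(tags))
    return {tag: minimum - n for tag, n in counts.items() if n < minimum}


# Hypothetical training folder: 30 shots of one model, only 12 of another.
training_set = {f"model-a-{i:02d}.jpg": ["model-a", "motorcycle"] for i in range(30)}
training_set.update({f"model-b-{i:02d}.jpg": ["model-b", "motorcycle"] for i in range(12)})

print(tags_needing_more_images(training_set))  # {'model-b': 18}
```

A check like this only covers image counts. Similarity, variety, and clean backgrounds still need a human eye.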

Training processes can be run as many times as you require to give your Smart Content Service a more complex understanding of your needs for AEM smart tags as they evolve.

However, once you’ve run a training process, the AI can’t unlearn what it learned in that specific process. So, make sure that before you run a training process, the tags and images you’re providing accurately represent how you want similar assets to be smart-tagged in the future.

Assets and AEM tag confidence scores

Even well-trained AIs aren’t perfect. So, when evaluating images, videos, or text-based files for smart tagging in AEM, the AI framework will also assign a confidence score to each tag.

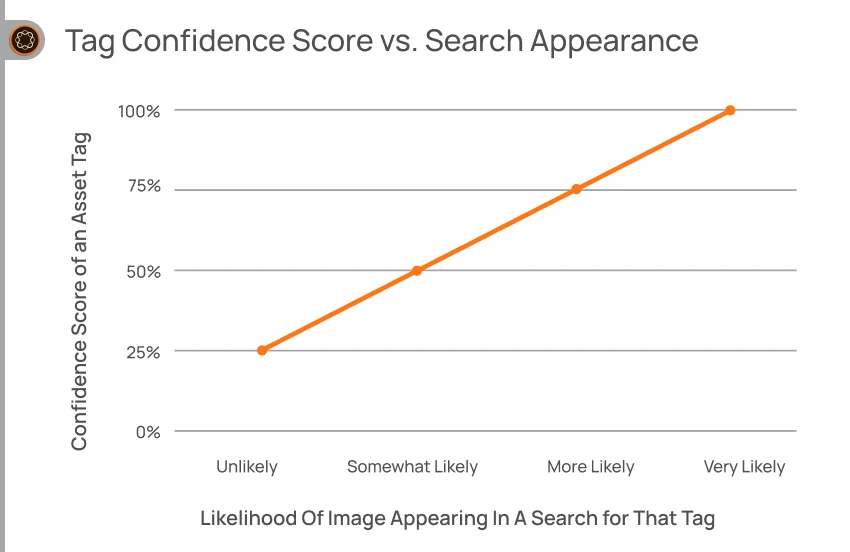

This confidence score expresses how sure the AI is that the tag accurately describes the asset. It’s a number between zero and one, which you can also read as a percentage. For example, a confidence score of 0.5 means that Adobe Sensei is 50% sure the tag is relevant to the asset.

Tags with higher confidence scores make the tagged file appear more prominently in search results for that tag’s keywords. Conversely, your files are less likely to show up in search results for tags with lower confidence scores.
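Here is a simplified Python illustration of confidence scores ordering search results. The data layout, the `ranked_search` function, and the optional `min_confidence` cutoff are hypothetical. AEM’s actual ranking logic is not reproduced here. The sketch shows only the idea that a higher score means a higher position.

```python
def ranked_search(
    assets: dict[str, dict[str, float]], keyword: str, min_confidence: float = 0.0
) -> list[tuple[str, float]]:
    """Return (asset, confidence) pairs for a keyword, highest confidence first.

    assets maps an asset name to its smart tags, each with a confidence in [0, 1].
    Tags scoring below min_confidence are ignored.
    """
    keyword = keyword.lower()
    hits = [
        (name, tags[keyword])
        for name, tags in assets.items()
        if keyword in tags and tags[keyword] >= min_confidence
    ]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


library = {
    "garage-shot.jpg": {"motorcycle": 0.62, "garage": 0.91},
    "track-day.jpg": {"motorcycle": 0.97, "racetrack": 0.88},
    "helmet.jpg": {"helmet": 0.95, "motorcycle": 0.31},
}

for name, score in ranked_search(library, "motorcycle"):
    print(f"{name}: {score:.0%}")  # reads the 0-1 score as a percentage
# track-day.jpg: 97%
# garage-shot.jpg: 62%
# helmet.jpg: 31%
```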

AEM users can also evaluate the confidence scores that the AI is assigning to tags, to confirm whether or not the tag is accurate for a given file. Doing so helps the AI continue to learn so that it can avoid placing an inaccurate tag on similar properties in the future.

The Challenges and Limitations of Smart Tagging

In most cases, smart tagging in AEM takes a tremendous amount of pressure off brands whose digital libraries have grown too large for manual tagging.

However, like any feature, smart tags in AEM have their limitations. These limitations shouldn’t keep you from investing in AEM Assets and Smart Content Service, but it’s important to be aware of them as you plan an optimal auto-tagging setup. Some limitations of smart tagging in AEM as a Cloud Service and AEM 6.5 are as follows:

- The AI is more likely to inaccurately tag images of lower quality.

- The AI can’t assign tags based on abstract concepts, like an image’s associated emotions.

- The AI can’t accurately tag images based on small visual differences, like tiny logos on clothing.

- Smart tags can only be applied in the languages supported by AEM.

- The maximum number of assets you can smart tag in a year is two million.

- Only images in JPG and PNG formats can be smart-tagged.
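If you batch assets for tagging, you could screen them against the format and quota limits with a small pre-flight check like this Python sketch. The `plan_smart_tagging` helper is hypothetical. The limits are taken from the list above, and accepting the `.jpeg` extension for JPG files is an assumption. Adobe’s documentation should be treated as the authority on what your version actually supports.

```python
from pathlib import Path

ANNUAL_LIMIT = 2_000_000                         # yearly cap stated in this article
SUPPORTED_SUFFIXES = {".jpg", ".jpeg", ".png"}   # JPG and PNG, per this article


def plan_smart_tagging(files: list[str], tagged_this_year: int) -> tuple[list[str], list[str]]:
    """Split files into (eligible, skipped) under the format and annual-quota limits."""
    remaining = max(ANNUAL_LIMIT - tagged_this_year, 0)
    eligible: list[str] = []
    skipped: list[str] = []
    for f in files:
        # Accept a file only if its format is supported and quota remains.
        if Path(f).suffix.lower() in SUPPORTED_SUFFIXES and len(eligible) < remaining:
            eligible.append(f)
        else:
            skipped.append(f)
    return eligible, skipped


eligible, skipped = plan_smart_tagging(
    ["hero.JPG", "logo.png", "brochure.pdf", "promo.mp4"], tagged_this_year=1_999_999
)
print(eligible)  # ['hero.JPG']
print(skipped)   # ['logo.png', 'brochure.pdf', 'promo.mp4']
```

Here only one slot of the yearly quota remains, so `logo.png` is skipped even though its format qualifies.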

Also, if your organization is fairly new to AEM, implementing it and preparing your assets can present a sizable technical challenge. You’ll want to make sure you understand Adobe’s technical documentation for setting up AEM and AEM Assets, and that you have the resources to accomplish this.

Conclusion

Large brands need to ensure that all their digital properties are usable and that their content teams can easily find relevant images through a simple search.

But even advanced systems like Smart Content Service don’t become easy-to-use tools on their own. Accurately tagging images, videos, and text-based files depends on carefully configuring AEM smart tags and training Adobe Sensei. This process can demand a lot of time and dedication before you can even start letting the AI do all the work.

The good news is: You and your teams don’t have to conquer this alone.

At Axamit, we make AEM feel like it was ready out of the box when you start using it—without any effort on your end. So, drop us a line to chat about getting AEM integration pressures off your plate!

FAQ

What is smart tagging, and how does it differ from manual tagging?

Smart tagging is when an AI evaluates the assets in your DAM and automatically applies the tags it determines are most relevant to each one. With manual tagging in AEM, a person assesses files and applies relevant tags to them one at a time.

What is the difference between metadata and tags in AEM?

In AEM, an asset’s metadata is a set of data that explains details about it (for example, its file type). A tag is saved as a kind of metadata for files in AEM. Tag functionality is specifically geared toward benefiting users, by making files searchable via the keywords used in the tags.

Can smart tagging be applied to existing content in AEM, or is it only for new content?

Both. Smart tagging can be applied to existing folders of content in AEM as well as to new content.